References:

https://www.cnblogs.com/lizexiong/p/15582280.html

https://github.com/prometheus-operator/kube-prometheus

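I. Overview

To monitor a k8s cluster, this article uses Prometheus to collect metrics and Grafana to visualize them.

1. Collection scheme

- kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, and kube-proxy each expose a /metrics endpoint of their own; Prometheus scrapes these endpoints to obtain cluster-level metrics.

- cadvisor (built into the kubelet) collects performance metrics for containers and Pods and exposes them for Prometheus to scrape.

- kube-state-metrics collects state metrics for k8s resource objects and exposes them on /metrics for Prometheus to scrape.

- prometheus-node-exporter collects host performance metrics and exposes them on /metrics for Prometheus to scrape.

- prometheus-blackbox-exporter probes applications over the network (http, tcp, icmp, and so on) and exposes the results on /metrics for Prometheus to scrape.

- Applications collect and expose their own metrics and carry the agreed annotations; Prometheus scrapes them based on those annotations.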

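2. Service discovery

Official documentation: https://prometheus.io/docs/prometheus/latest/configuration/configuration/#kubernetes_sd_config

Prometheus supports Kubernetes-based service discovery. With kubernetes_sd_config it retrieves scrape targets from the Kubernetes REST API and stays in sync with the cluster state, so every monitorable target deployed in Kubernetes is discovered dynamically.

The role setting selects which kind of target is discovered: node, service, pod, endpoints, endpointslice, or ingress.

For example, with the role of a kubernetes_sd_config set to node, Prometheus automatically discovers every node in the cluster and uses each one as a target instance of the current job. The job does, however, need the paths of the CA certificate and of the access token file used to reach the Kubernetes API.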
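As a rough illustration of the node role described above, a scrape job could look like the fragment below. This is a minimal sketch, not the configuration used later in the article: the job name example-nodes is made up, and whether the kubelet accepts the ServiceAccount token and certificate directly depends on how the cluster was set up.

```yaml
scrape_configs:
  - job_name: "example-nodes"        # hypothetical job name
    scheme: https
    kubernetes_sd_configs:
      - role: node                   # one target per cluster node
    tls_config:
      # CA certificate mounted into the Pod from its ServiceAccount
      ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    # Token mounted into the Pod from its ServiceAccount
    bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
```

The full configuration later in the article uses the same two files but rewrites each target so that the request goes through the API server's node proxy.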

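3. Annotation conventions

Within k8s we can agree on a set of annotations: an application that carries them declares that it exposes its own metrics, and Prometheus uses the annotations to decide what to scrape and how to group it. The convention is entirely up to you; the ones I commonly use are:

# Port
prometheus.io/port: '8888'
# Scheme
prometheus.io/scheme: http
# Metrics path
prometheus.io/path: /metrics
# Environment
prometheus.io/env: test
# Project name
prometheus.io/org: xxx
# Application name
prometheus.io/app: xxx
# Whether to scrape (annotation values must be strings, so quote booleans)
prometheus.io/scraped: 'true'
# Whether to probe
prometheus.io/probed: 'true'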
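To show where these annotations go, here is a sketch of a Service that opts in to scraping. The name demo-app, the default namespace, and the selector are placeholders invented for the example; only the annotation keys come from the convention above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: demo-app                      # hypothetical application
  namespace: default                  # hypothetical namespace
  annotations:
    prometheus.io/scraped: "true"     # quoted: annotation values are strings
    prometheus.io/scheme: http
    prometheus.io/port: "8888"
    prometheus.io/path: /metrics
    prometheus.io/env: test
    prometheus.io/app: demo-app
spec:
  selector:
    app: demo-app
  ports:
    - name: http
      port: 8888
      targetPort: 8888
```

Annotations by themselves do nothing; they only take effect once a scrape job's relabeling rules read them, as the scrape configuration later in the article does.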

II. Deploying Prometheus
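Every namespaced manifest in this article targets the monitor namespace, but the article never shows it being created. Assuming it does not exist yet in your cluster, a minimal manifest for it would be:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: monitor
```

Apply it before the other manifests, otherwise objects that reference namespace: monitor are rejected.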

Create the RBAC objects so that Prometheus can read resources inside the k8s cluster and scrape the /metrics path.

prometheus-rbac.yaml:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
    verbs: ["get", "watch", "list"]
  # Ingress is served from networking.k8s.io; the extensions group was removed in k8s 1.22.
  - apiGroups: ["extensions", "networking.k8s.io"]
    resources:
      - ingresses
    verbs: ["get", "watch", "list"]
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitor
  labels:
    app: prometheus
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: monitor
roleRef:
  kind: ClusterRole
  name: prometheus
  apiGroup: rbac.authorization.k8s.io

Create the ConfigMap that holds the Prometheus configuration. This is the bare minimum for now; further settings are added step by step later.

prometheus-conf-configmap.yaml:

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-conf
  namespace: monitor
  labels:
    app: prometheus
data:
  prometheus.yml: |-
    # Global settings
    global:
      scrape_interval: 30s     # How often to scrape targets; the default is 1 minute.
      evaluation_interval: 30s # How often to evaluate rules; the default is 1 minute.
    alerting:                  # Alerting settings: the Alertmanager addresses.
      alertmanagers:
        - static_configs:
            - targets:
    rule_files:                # List of files containing recording rules or alerting rules.
    scrape_configs:            # Scrape settings: the targets Prometheus collects from.
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']

Create the ConfigMap that holds the alerting rules; the actual rules are added later.

prometheus-rule-configmap.yaml:

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: monitor
  labels:
    app: prometheus
data:
  cpu-load.rule: |
    groups:
      - name: example
        rules:
          - alert: example

Create a PV and a PVC to persist the Prometheus data. Because the environment is a single node, a local PV is used directly.

prometheus-local-pv.yaml:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-pv
  labels:
    app: prometheus
spec:
  capacity:
    storage: 20Gi
  accessModes: ["ReadWriteOnce"]
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local
  local:
    path: /data/prometheus
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - cp
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus-pvc
  namespace: monitor
spec:
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 20Gi
  storageClassName: local
  selector:
    matchLabels:
      app: prometheus

Create the Service.

prometheus-service.yaml:

kind: Service
apiVersion: v1
metadata:
  name: prometheus
  namespace: monitor
  labels:
    app: prometheus
spec:
  type: NodePort
  ports:
    - port: 9090
      targetPort: 9090
      nodePort: 30002
  selector:
    app: prometheus

Create the Deployment.

prometheus-deployment.yaml:

kind: Deployment
apiVersion: apps/v1
metadata:
  name: prometheus
  namespace: monitor
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus
      containers:
        - name: prometheus
          image: prom/prometheus:v2.36.2
          args:
            - "--config.file=/etc/prometheus/prometheus.yml" # Configuration file
            - "--storage.tsdb.path=/prometheus"              # Where the TSDB stores its data
            - "--storage.tsdb.retention.time=15d"            # How long to keep samples; the default is 15 days (replaces the deprecated --storage.tsdb.retention)
            - "--storage.tsdb.no-lockfile"                   # Do not create a lockfile in the data directory
            - "--web.enable-lifecycle"                       # Enable hot reload, e.g. curl -X POST localhost:9090/-/reload
            - "--web.console.libraries=/usr/share/prometheus/console_libraries"
            - "--web.console.templates=/usr/share/prometheus/consoles"
          volumeMounts:
            - mountPath: /prometheus
              name: prometheus-data-volume
            - mountPath: /etc/prometheus/prometheus.yml
              name: prometheus-conf-volume
              subPath: prometheus.yml
            - mountPath: /etc/prometheus/rules
              name: prometheus-rules-volume
          ports:
            - containerPort: 9090
              protocol: TCP
      volumes:
        - name: prometheus-data-volume
          persistentVolumeClaim:
            claimName: prometheus-pvc
        - name: prometheus-conf-volume
          configMap:
            name: prometheus-conf
        - name: prometheus-rules-volume
          configMap:
            name: prometheus-rules
      # The prometheus image runs as the user nobody (65534), and so does the process.
      # These settings resolve permission problems on the mounted directories.
      securityContext:
        runAsUser: 65534
        runAsGroup: 65534
        fsGroup: 65534
        runAsNonRoot: true

A Pod created with serviceAccountName set automatically gets the CA certificate for the Kubernetes API and the access token of that account mounted under /var/run/secrets/kubernetes.io/serviceaccount/.

III. Deploying node-exporter

node-exporter is deployed as a DaemonSet so that one instance runs on every node and collects that host's performance metrics.

node-exporter.yaml:

apiVersion: v1
kind: Service
metadata:
  name: node-exporter
  namespace: monitor
  labels:
    app: node-exporter
  annotations:
    prometheus.io/app: "node-exporter"
    prometheus.io/port: '9100'
    prometheus.io/path: '/metrics'
spec:
  ports:
    - name: node-exporter
      port: 9100
      targetPort: 9100
  selector:
    app: node-exporter
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitor
  labels:
    app: node-exporter
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      containers:
        - name: node-exporter
          image: prom/node-exporter:v1.3.1
          args:
            - --web.listen-address=:9100
            - --path.sysfs=/host/sys
            - --path.rootfs=/host/root
            - --no-collector.wifi
            - --no-collector.hwmon
            - --collector.filesystem.mount-points-exclude=^/(dev|proc|sys|run/k3s/containerd/.+|var/lib/docker/.+|var/lib/kubelet/pods/.+)($|/)
            - --collector.netclass.ignored-devices=^(veth.*|[a-f0-9]{15})$
            - --collector.netdev.device-exclude=^(veth.*|[a-f0-9]{15})$
          resources:
            limits:
              cpu: 250m
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 200Mi
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              add:
                - SYS_TIME
              drop:
                - ALL
            readOnlyRootFilesystem: true
          volumeMounts:
            - mountPath: /host/sys
              mountPropagation: HostToContainer
              name: sys
              readOnly: true
            - mountPath: /host/root
              mountPropagation: HostToContainer
              name: root
              readOnly: true
      hostNetwork: true
      hostPID: true
      securityContext:
        runAsNonRoot: true
        runAsUser: 65534
      volumes:
        - hostPath:
            path: /sys
          name: sys
        - hostPath:
            path: /
          name: root
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 10%
    type: RollingUpdate
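A DaemonSet only places Pods on nodes whose taints those Pods tolerate, so on a cluster with tainted control-plane nodes the manifest above may leave some nodes without an exporter. One possible adjustment, offered as an assumption about your cluster rather than a required change, is to tolerate every taint:

```yaml
# Fragment to merge into the node-exporter DaemonSet; not a complete manifest.
spec:
  template:
    spec:
      tolerations:
        - operator: Exists   # an empty key with Exists matches every taint
```

Tolerating everything is the broadest choice; listing specific taint keys is the narrower alternative if only some tainted nodes should be covered.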

IV. blackbox-exporter

Deploy blackbox-exporter to probe Ingresses and Services over the network.

blackbox-exporter.yaml:

apiVersion: v1
kind: ConfigMap
metadata:
  name: blackbox-exporter-configuration
  namespace: monitor
  labels:
    app: blackbox-exporter
data:
  config.yml: |-
    "modules":
      "http_2xx":
        "http":
          "preferred_ip_protocol": "ip4"
        "prober": "http"
      "http_post_2xx":
        "http":
          "method": "POST"
          "preferred_ip_protocol": "ip4"
        "prober": "http"
      "irc_banner":
        "prober": "tcp"
        "tcp":
          "preferred_ip_protocol": "ip4"
          "query_response":
            - "send": "NICK prober"
            - "send": "USER prober prober prober :prober"
            - "expect": "PING :([^ ]+)"
              "send": "PONG ${1}"
            - "expect": "^:[^ ]+ 001"
      "pop3s_banner":
        "prober": "tcp"
        "tcp":
          "preferred_ip_protocol": "ip4"
          "query_response":
            - "expect": "^+OK"
          "tls": true
          "tls_config":
            "insecure_skip_verify": false
      "ssh_banner":
        "prober": "tcp"
        "tcp":
          "preferred_ip_protocol": "ip4"
          "query_response":
            - "expect": "^SSH-2.0-"
      "tcp_connect":
        "prober": "tcp"
        "tcp":
          "preferred_ip_protocol": "ip4"
---
apiVersion: v1
kind: Service
metadata:
  name: blackbox-exporter
  namespace: monitor
  labels:
    app: blackbox-exporter
spec:
  ports:
    - name: http
      port: 9115
      targetPort: 9115
  selector:
    app: blackbox-exporter
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blackbox-exporter
  namespace: monitor
  labels:
    app: blackbox-exporter
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      containers:
        - name: blackbox-exporter
          image: prom/blackbox-exporter:v0.21.1
          args:
            - --config.file=/etc/blackbox_exporter/config.yml
            - --web.listen-address=:9115
          ports:
            - containerPort: 9115
              name: http
          resources:
            limits:
              cpu: 20m
              memory: 40Mi
            requests:
              cpu: 10m
              memory: 20Mi
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
            readOnlyRootFilesystem: true
            runAsNonRoot: true
            runAsUser: 65534
          volumeMounts:
            - mountPath: /etc/blackbox_exporter/
              name: config
              readOnly: true
        - name: module-configmap-reloader
          image: jimmidyson/configmap-reload:v0.5.0
          args:
            - --webhook-url=http://localhost:9115/-/reload
            - --volume-dir=/etc/blackbox_exporter/
          resources:
            limits:
              cpu: 20m
              memory: 40Mi
            requests:
              cpu: 10m
              memory: 20Mi
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
            readOnlyRootFilesystem: true
            runAsNonRoot: true
            runAsUser: 65534
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: FallbackToLogsOnError
          volumeMounts:
            - mountPath: /etc/blackbox_exporter/
              name: config
              readOnly: true
      volumes:
        - configMap:
            name: blackbox-exporter-configuration
          name: config
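blackbox-exporter only probes what Prometheus hands to it. Under the annotation convention from the overview, an Ingress opts in with prometheus.io/probed, which the kubernetes-ingresses job in the scrape configuration later in this article checks. The sketch below shows such an Ingress; the name, namespace, host, and backend are invented placeholders.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-app                      # hypothetical
  namespace: default                  # hypothetical
  annotations:
    prometheus.io/probed: "true"      # quoted: annotation values are strings
spec:
  rules:
    - host: demo.example.com          # hypothetical host
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: demo-app        # hypothetical backend Service
                port:
                  number: 8888
```

With the http_2xx module configured above, a probe of this Ingress would count as successful when the URL answers with a 2xx status.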

V. kube-state-metrics

Official documentation: https://github.com/kubernetes/kube-state-metrics

kube-state-metrics (KSM) monitors k8s resource objects such as deployments, nodes, and pods.

[root@cp ~]# wget -O kube-state-metrics-2.5.0.tar.gz https://github.com/kubernetes/kube-state-metrics/archive/refs/tags/v2.5.0.tar.gz
[root@cp ~]# tar -xf kube-state-metrics-2.5.0.tar.gz
[root@cp ~]# cd kube-state-metrics-2.5.0/examples/standard/
[root@cp standard]# ls
cluster-role-binding.yaml  cluster-role.yaml  deployment.yaml  service-account.yaml  service.yaml
[root@cp standard]# kubectl apply -f .
clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
deployment.apps/kube-state-metrics created
serviceaccount/kube-state-metrics created
service/kube-state-metrics created
[root@cp ~]# kubectl get pod -n kube-system kube-state-metrics-58c5984f79-zs7rz -o wide
NAME                                  READY   STATUS    RESTARTS   AGE   IP             NODE   NOMINATED NODE   READINESS GATES
kube-state-metrics-58c5984f79-zs7rz   1/1     Running   0          25m   10.244.0.147   cp     <none>           <none>
[root@cp ~]# kubectl get svc -n kube-system kube-state-metrics
NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)             AGE
kube-state-metrics   ClusterIP   None         <none>        8080/TCP,8081/TCP   26m
[root@cp ~]# curl -s 10.244.0.147:8080/metrics

# What the two ports are for
8080: exposes the metrics about Kubernetes objects
8081: exposes kube-state-metrics' own metrics

VI. Metric collection

Official documentation: https://prometheus.io/docs/prometheus/latest/configuration/configuration/#kubernetes_sd_config

Official example: https://github.com/prometheus/prometheus/blob/release-2.36/documentation/examples/prometheus-kubernetes.yml

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-conf

namespace: monitor

labels:

app: prometheus

data:

prometheus.yml: |-

# 全局配置

global:

scrape_interval: 30s # 抓取数据的时间间隔将,默认为 1 分钟。

evaluation_interval: 30s # 评估规则的的频率,默认值为 1 分钟。

alerting: # 报警配置,配置 alertmanagers 地址。

alertmanagers:

- static_configs:

- targets:

rule_files: # 规则文件配置,用来指定包含记录规则或者警报规则的文件列表。

scrape_configs:

- job_name: 'prometheus'

# metrics_path 默认为 '/metrics'

# scheme 默认为 'http'

static_configs:

- targets: ['localhost:9090']

# kube-state-metrics 的抓取配置

- job_name: 'kube-state-metrics'

static_configs:

- targets: ['kube-state-metrics.kube-system.svc.cluster.local:8080','kube-state-metrics.kube-system.svc.cluster.local:8081']

# node-exporter 的抓取配置

- job_name: "node-exporter"

kubernetes_sd_configs:

- role: endpoints

relabel_configs:

# 仅抓取 annotation 含 prometheus.io/app 并且值为 node-exporter 的 Service。

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_app]

action: keep

regex: node-exporter

# 如果 Service annotation 指定了 prometheus.io/scheme,则替换抓取配置。

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]

action: replace

target_label: __scheme__

regex: (https?)

# 如果 Service annotation 指定了 prometheus.io/path,则替换抓取配置。

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

# 如果 Service annotation 指定了 prometheus.io/port,则替换抓取配置。

- source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]

action: replace

target_label: __address__

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

# 匹配 Service 的所有标签

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

# 匹配 Service 的命名空间,将其值替换到目标标签中

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: namespace

# 匹配 Service 的名称,将其值替换到目标标签中

- source_labels: [__meta_kubernetes_service_name]

action: replace

target_label: service

- job_name: "kubernetes-apiservers"

kubernetes_sd_configs:

- role: endpoints

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- source_labels:

[

__meta_kubernetes_namespace,

__meta_kubernetes_service_name,

__meta_kubernetes_endpoint_port_name,

]

action: keep

regex: default;kubernetes;https

- job_name: "kubernetes-nodes"

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/${1}/proxy/metrics

- job_name: "kubernetes-cadvisor"

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

# 适配 https://grafana.com/grafana/dashboards/13105 仪表盘

metric_relabel_configs:

- source_labels: [instance]

separator: ;

regex: (.+)

target_label: node

replacement: $1

action: replace

# Service endpoint 的抓取配置

- job_name: "kubernetes-service-endpoints"

kubernetes_sd_configs:

- role: endpoints

relabel_configs:

# 仅抓取 annotation 含 prometheus.io/scraped 并且值为 true 的 Service endpoint。

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scraped]

action: keep

regex: true

# 如果 Service annotation 指定了 prometheus.io/scheme,则替换抓取配置。

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]

action: replace

target_label: __scheme__

regex: (https?)

# 如果 Service annotation 指定了 prometheus.io/path,则替换抓取配置。

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

# 如果 Service annotation 指定了 prometheus.io/port,则替换抓取配置。

- source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]

action: replace

target_label: __address__

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

# 匹配 Service 的所有标签

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

# 匹配 Service 的命名空间,将其值替换到目标标签中

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: namespace

# 匹配 Service 的名称,将其值替换到目标标签中

- source_labels: [__meta_kubernetes_service_name]

action: replace

target_label: service

# 通过 Blackbox Exporter 探测 Servcie 的抓取配置

- job_name: "kubernetes-services"

metrics_path: /probe

params:

module: [http_2xx]

kubernetes_sd_configs:

- role: service

relabel_configs:

# 仅抓取 annotation 含 prometheus.io/probed 并且值为 true 的 Service。

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probed]

action: keep

regex: true

- source_labels: [__address__]

target_label: __param_target

- target_label: __address__

replacement: blackbox-exporter.monitor.svc:9115

- source_labels: [__param_target]

target_label: instance

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

target_label: namespace

- source_labels: [__meta_kubernetes_service_name]

target_label: service

# 通过 Blackbox Exporter 探测 Ingress 的抓取配置。

- job_name: "kubernetes-ingresses"

metrics_path: /probe

params:

module: [http_2xx]

kubernetes_sd_configs:

- role: ingress

relabel_configs:

# 仅抓取 annotation 含 prometheus.io/probed 并且值为 true 的 Ingress。

- source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probed]

action: keep

regex: true

- source_labels:

[

__meta_kubernetes_ingress_scheme,

__address__,

__meta_kubernetes_ingress_path,

]

regex: (.+);(.+);(.+)

replacement: ${1}://${2}${3}

target_label: __param_target

- target_label: __address__

replacement: blackbox-exporter.monitor.svc:9115

- source_labels: [__param_target]

target_label: instance

- action: labelmap

regex: __meta_kubernetes_ingress_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

target_label: namespace

- source_labels: [__meta_kubernetes_ingress_name]

target_label: ingress

# Pod 的抓取配置

- job_name: "kubernetes-pods"

kubernetes_sd_configs:

- role: pod

relabel_configs:

# 仅抓取 annotation 含 prometheus.io/scraped 并且值为 true 的 Pod。

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scraped]

action: keep

regex: true

# 如果 Pod annotation 指定了 prometheus.io/path,则替换抓取配置。

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

# 如果 Pod annotation 指定了 prometheus.io/port,则替换抓取配置。

- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

action: replace

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

target_label: __address__

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

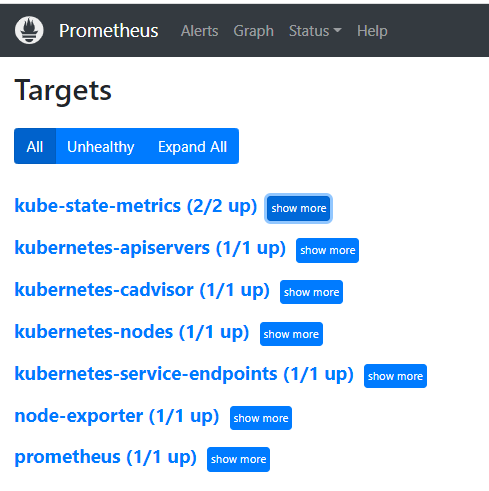

target_label: pod配置好后重启,登录到 prometheus,查看 targets。
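The rule that rewrites __address__ from the prometheus.io/port annotation is the least obvious part of this configuration, so here is a hand trace of it written out as YAML. It is an illustration only, not something Prometheus reads: the IP address and ports are made up, and the keys before, joined, captures, and after are just labels for the steps.

```yaml
# Illustrative trace of one relabel rule; all label values are invented.
rule:
  source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
  regex: ([^:]+)(?::\d+)?;(\d+)
  replacement: $1:$2
  target_label: __address__
before:
  __address__: "10.244.0.12:8080"
  __meta_kubernetes_service_annotation_prometheus_io_port: "9100"
joined: "10.244.0.12:8080;9100"   # source label values joined with the default separator ";"
captures:
  "$1": "10.244.0.12"             # host part; the optional ":8080" is matched but not captured
  "$2": "9100"                    # port taken from the annotation
after:
  __address__: "10.244.0.12:9100"
```

Prometheus anchors relabel regexes at both ends, so when the port annotation is missing the joined value ends in ";" with no digits, the regex does not match, and __address__ is left as discovered.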

VII. Grafana

grafana.yaml:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana-pv
  labels:
    app: grafana
spec:
  capacity:
    storage: 20Gi
  accessModes: ["ReadWriteOnce"]
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local
  local:
    path: /test-data/grafana
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - cp
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  namespace: monitor
spec:
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 20Gi
  storageClassName: local
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitor
  labels:
    app: grafana
  annotations:
    prometheus.io/scraped: "true"
spec:
  ports:
    - port: 3000
      nodePort: 30003
      protocol: TCP
      targetPort: http-grafana
  selector:
    app: grafana
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: monitor
  labels:
    app: grafana
spec:
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      securityContext:
        fsGroup: 472
        supplementalGroups:
          - 0
      containers:
        - name: grafana
          image: grafana/grafana:8.4.4
          ports:
            - containerPort: 3000
              name: http-grafana
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /robots.txt
              port: 3000
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 30
            successThreshold: 1
            timeoutSeconds: 2
          livenessProbe:
            failureThreshold: 3
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            tcpSocket:
              port: 3000
            timeoutSeconds: 1
          resources:
            requests:
              cpu: 100m
              memory: 500Mi
          volumeMounts:
            - mountPath: /var/lib/grafana
              name: grafana-pv
      volumes:
        - name: grafana-pv
          persistentVolumeClaim:
            claimName: grafana-pvc

Configure the data source:
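The data source can be added by hand in the Grafana UI. As an alternative sketch, Grafana can also load it from a provisioning file placed under /etc/grafana/provisioning/datasources/. The URL below assumes the prometheus Service in the monitor namespace on port 9090, as created earlier in this article; the data source name is an arbitrary choice.

```yaml
apiVersion: 1
datasources:
  - name: Prometheus                            # display name, arbitrary
    type: prometheus
    access: proxy                               # Grafana's backend issues the queries
    url: http://prometheus.monitor.svc:9090     # in-cluster Service address
    isDefault: true
```

Getting this file into the Pod would need an extra ConfigMap and volume mount in the Grafana Deployment, which the manifest above does not include.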

Import dashboards:

Recommended for k8s cluster monitoring: https://grafana.com/grafana/dashboards/13105

Recommended for node monitoring: https://grafana.com/grafana/dashboards/16098